LetsTalk: Latent Diffusion Transformer for Talking Video Synthesis

2Institute of Automation, Chinese Academy of Sciences

3Beijing National Research Center for Information Science and Technology, Tsinghua University

4AI Lab, Tencent 5Department of Automation, Tsinghua University

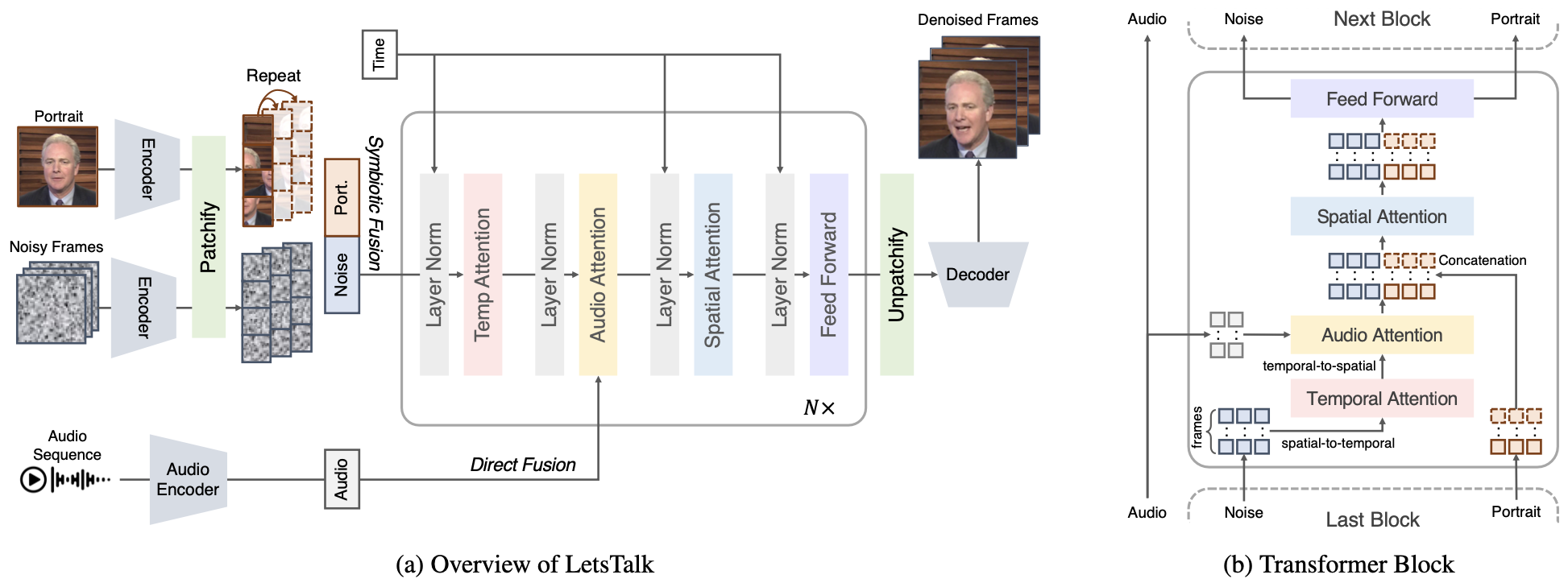

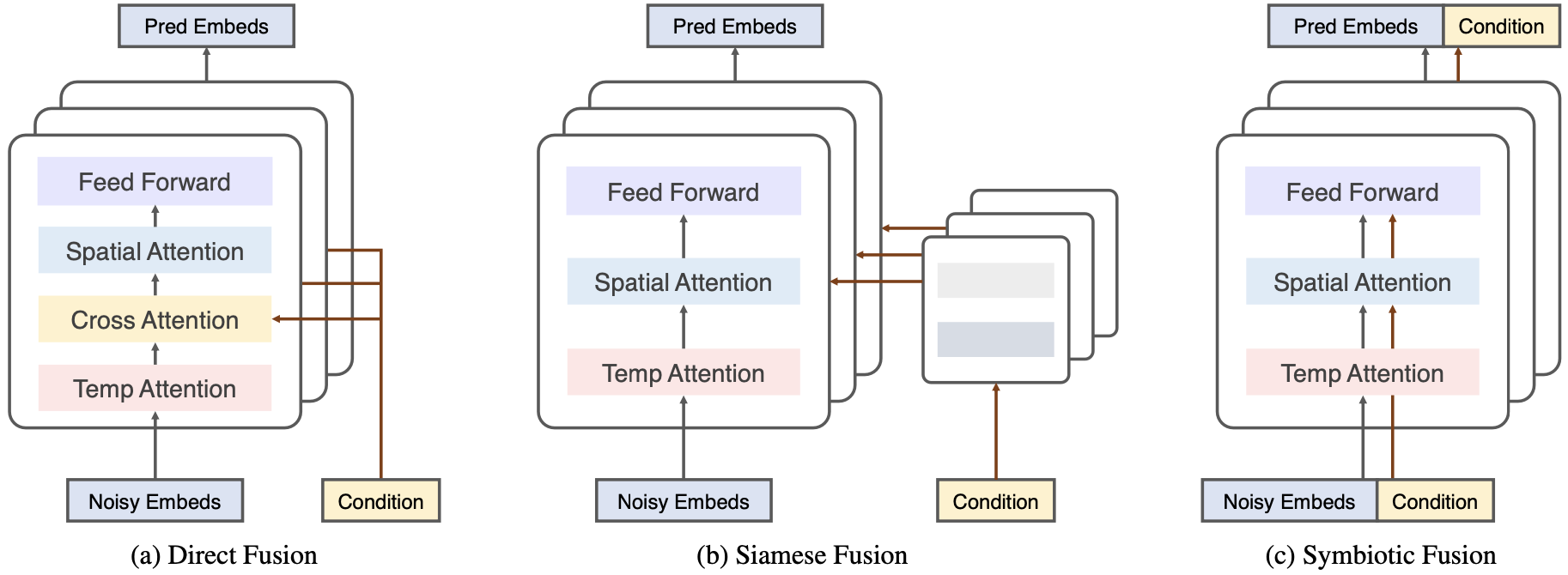

TL;DR: We present LetsTalk, an innovative Diffusion Transformer with tailored fusion schemes for audio-driven portrait animation, achieving excellent portrait consistency and liveliness in the generated animations.

We propose LetsTalk, a diffusion-based transformer for audio-driven portrait image animation. Left: Given a single reference image and audio, LetsTalk can produce a realistic and vivid video aligned with the input audio. Note that each column corresponds to the same audio. The results show that LetsTalk can drive consistent and reasonable mouth motions for the input audio. Right: Generation quality vs. inference time on the HDTF dataset; the circle area reflects the number of parameters of each method. LetsTalk achieves the best quality while also being highly efficient at inference, compared to current mainstream diffusion-based methods such as Hallo and AniPortrait. In addition, our base version, LetsTalk-B, achieves performance similar to Hallo with 8× fewer parameters.